“Some people worry that artificial intelligence will make us feel inferior, but then, anybody in his right mind should have an inferiority complex every time he looks at a flower.” Alan Kay, computer scientist

Computer systems are beginning to do things we used to believe required human thought: dealing with uncertainty; learning from experience; making predictions; interpreting language in a complex, contextual manner. Some systems called neural networks are even patterned after the human brain. These emerging forms of artificial intelligence (AI) can operate on a scale that exceeds human capacity, unlocking the potential of the enormous amounts of data we are generating. As with any technological revolution, the coming era of AI holds both promise and peril. AI algorithms are powering breakthroughs that may fundamentally improve the human condition, but because AI can “think” faster, cheaper, and (in many cases) better than humans, it is expected to displace many professional jobs. AI offers museums the practical tools they need to manage their own swelling data sets, as well as new avenues for creativity.

In 1950, Alan Turing published a paper titled “Computing Machinery and Intelligence” in which he presented a framework for judging whether a machine can think. Turing, who is best known to the public for his work cracking the German “Enigma” code during World War II, proposed what he called the Imitation Game (also known as the Turing Test) in which an interrogator, asking questions of hidden respondents, tries to guess whether they are man or machine based on their replies.

Fast forward to spring 2016, when a computer science professor at Georgia Tech revealed to his class that one of their online teaching assistants that semester had, unbeknownst to them, been a kind of computer program called a “chatbot.” Some students had been suspicious, if only because the program, dubbed “Jill Watson,” responded so promptly and efficiently to their questions. Still, overall it seems that Jill herself earned a passing grade on Turing’s Test.

Chatbots like Jill Watson use AI to simulate human conversation, drawing on a host of programs and interfaces that have been created based on Turing’s work. Natural language interfaces enable computers to accept normal human speech as input rather than requiring specialized language, syntax, or terminology. “Machine learning” gives programs the ability to improve based on experience, refining their performance as they test algorithmic predictions against real-world outcomes. These and other recent advances in hardware and software have given rise to what’s being called “cognitive computing”—programming so sophisticated and adaptable that it mimics the function of the human brain.
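To make the idea of “refining performance as predictions are tested against real-world outcomes” concrete, here is a minimal sketch in Python. It is a toy illustration only, not a description of how Jill Watson or any commercial system works: the function names, the learning rate, and the sample data are all assumptions made up for this example. The program starts out knowing nothing, makes a prediction, compares it with the observed outcome, and nudges its internal weights to shrink the error.

```python
from typing import List, Tuple

Example = Tuple[List[float], float]  # (input features, observed outcome)


def predict(weights: List[float], features: List[float]) -> float:
    """Linear prediction; the last weight is a bias term."""
    return sum(w * x for w, x in zip(weights, features)) + weights[-1]


def train_online(examples: List[Example],
                 learning_rate: float = 0.05,
                 epochs: int = 500) -> List[float]:
    """Learn weights by repeatedly correcting prediction errors."""
    n_features = len(examples[0][0])
    weights = [0.0] * (n_features + 1)  # start with no knowledge at all
    for _ in range(epochs):
        for features, outcome in examples:
            # Test the current prediction against the real-world outcome.
            error = outcome - predict(weights, features)
            # Nudge every weight in the direction that reduces the error.
            for i, x in enumerate(features):
                weights[i] += learning_rate * error * x
            weights[-1] += learning_rate * error
    return weights


if __name__ == "__main__":
    # Hypothetical data that follows the hidden rule: outcome = 2 * x + 1.
    data = [([0.0], 1.0), ([1.0], 3.0), ([2.0], 5.0), ([3.0], 7.0)]
    learned = train_online(data)
    print("prediction for an unseen input of 4.0:", predict(learned, [4.0]))
```

The program is never told the rule behind the data; it recovers an approximation of it purely from experience, which is the sense in which such programs “learn.” Real systems use far larger models and data sets, but the loop of predict, compare, and adjust is the same in spirit.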

AI programs can do far more than chatter. IBM has invested more than $1 billion over the past two years in its Watson Group, a division devoted to applying the power of cognitive computing and big data to health care, retail, banking, and insurance. IBM’s artificial intelligence program, called IBM Watson, made headlines when it became the 2011 Jeopardy! champion, beating two of the game’s best human players, and it continues to present a warm, relatable side to the public as it invents new recipes, dabbles in fashion, and edits movie trailers. On a more serious note, Watson is an ace diagnostician, a cybersecurity expert, and a savvy investment analyst. And now (coming full circle), IBM is using Watson to jump into the chatbot market itself, partnering with the popular workplace messaging app Slack to create a superior chatbot, one that can infer emotion from speech.

IBM is just one player in a rapidly expanding pool of government and private investment in AI: Apple, Microsoft, Facebook, GE, Google, and Amazon are in the game as well. These companies, starting from very different places (hardware, software, appliances, social media, and retail), are all using AI to take their work to a new level. Research is already starting to tackle the next frontiers. We are on the cusp of creating computers that vastly accelerate the speed of calculations and the density of data storage by using quantum-scale systems such as atoms, photons, and nuclear spins to register information. These quantum computers could fuel the development of neural networks and deep learning, forms of artificial intelligence that are particularly good at pattern recognition. And these tools could in turn enable us to design an artificial general intelligence (AGI) capable of operating across a broad range of cognitive tasks.

WHAT THIS MEANS FOR SOCIETY

AI has the potential to fuel economic growth and make the world better in a host of ways. AI-guided autonomous vehicles could have a massive effect on public transportation, reducing congestion and pollution while increasing accessibility for the elderly and disabled. AI can improve health care, holding out the promise of personalized medicine and improved diagnostics. AI may be able to make inroads on intractable social problems that humans alone haven’t been able to solve: researchers are using machine learning to map global poverty with unprecedented accuracy, and a Stanford undergrad is programming a chatbot to help people who are homeless access support. But the most exciting applications for AI may be in the realm of education. IBM Watson and its kin make possible the kind of learning Neal Stephenson envisioned in The Diamond Age. That novel’s dystopian society, marred by huge cultural and economic divides, is revolutionized by the invention of AI-powered primers that serve as personalized, responsive mentors to children, creating a social revolution in which high-quality education becomes accessible to all. And if that sounds too much like a sci-fi scenario, take note that the Defense Advanced Research Projects Agency’s Educational Dominance program is already using AI-powered “digital tutors” to speed up training of Navy recruits.

All technological revolutions result in massive social and economic change. Historically, the most profound disruptions have been around labor, and AI is no exception, promising to reshape cognitive work as radically as robots transformed manufacturing in the 20th century. Researchers at Oxford University project that AI will contribute to the loss of up to 47 percent of US jobs in the next 20 years, many of them professional jobs that traditionally require advanced degrees. Some law firms have already begun to use “artificially intelligent attorneys” to research legal issues, and one report predicts that by 2030 the traditional structure of the legal profession will collapse, as legal bots take on the majority of “low-level economy work.” On the other hand, even as AI erodes the value of cognitive work, it may increase the value of human judgment. In this scenario, human professionals will partner with AI to enhance their abilities. One such partnership that’s already proven successful: AI and doctors working to diagnose breast cancer with 99.5 percent accuracy—better than either humans or machines working alone.

As we give AI power over the systems that shape our lives, society will need to work through a host of associated legal and ethical issues. For decades, students of moral philosophy have wrestled with the Trolley Problem, a conundrum that challenges them to decide what they would do if, as the driver of a runaway train, they were forced to choose between running down and killing one set of people or another. The programmers of self-driving cars have to write an algorithm that specifies how to behave in real-world versions of that problem. The Pentagon is laying the groundwork for so-called autonomous weapons—robots and drones empowered to take lives without direct human oversight. More subtly damaging is the ability of AI algorithms to inherit the biases of their programmers and “learn” bias from the broader world, entrenching prejudices such as racism or gender bias into seemingly objective processes. How can we make AI programs transparent and accountable for their outcomes?

The rise of AI further challenges what and how we teach. The development of slide rules, then calculators, made it possible for kids to spend less time learning math as a process, and more time using it as a tool for higher reasoning. When schoolchildren use AI as naturally as any other technological tool, will teachers be freed to emphasize higher-level functions such as judgment and creativity, as well as emotional skills such as empathy and compassion?

WHAT THIS MEANS FOR MUSEUMS

As AI becomes more effective and affordable, it will become part of the standard toolkit of museums seeking to enhance their business practices. Rather than displacing staff, AI can give smaller museums that can’t afford dedicated specialists access to AI-powered legal services, marketing, communications, and data analytics. Even exploiting the potential of AI itself will not necessarily require AI-specific expertise, as we develop plug-in applications for the technology.

The Brooklyn Museum already uses Natural Language Processing algorithms to assist the work of the behind-the-scenes staff fielding visitor questions via their award-winning ASK App. Imagine a future in which any visitor to any museum can lob questions at an AI expert that mines both the museum’s own collections data and the mass of scholarship available on the Web.

AI will be an essential tool for museums managing the massive scale of data in the 21st century. Visual recognition algorithms can unlock the potential of digital image collections by tagging, sorting, and drawing connections within and between museum databases. The museum field is struggling to manage the burgeoning mass of archival material “born digitally” via e-mail and social media. As Rich Cherry, deputy director at The Broad, has pointed out, the scale of the problem is staggering: while the Clinton presidential library has a manageable number of e-mails, President Obama’s library will contain more than a billion. AI may be the only feasible way to make meaning of archives at this scale.

AI is also a powerful tool for making museums and their collections more accessible and more useful to the general public. Natural language interfaces can empower users to mine collections databases without knowing a specialized vocabulary. Programs powered by AI can help the curious surf museum data in playful ways. Front of house, visitors can use ever more accurate translation programs (such as Google’s Translate app) powered by machine-learning algorithms to read exhibit labels and converse with staff.

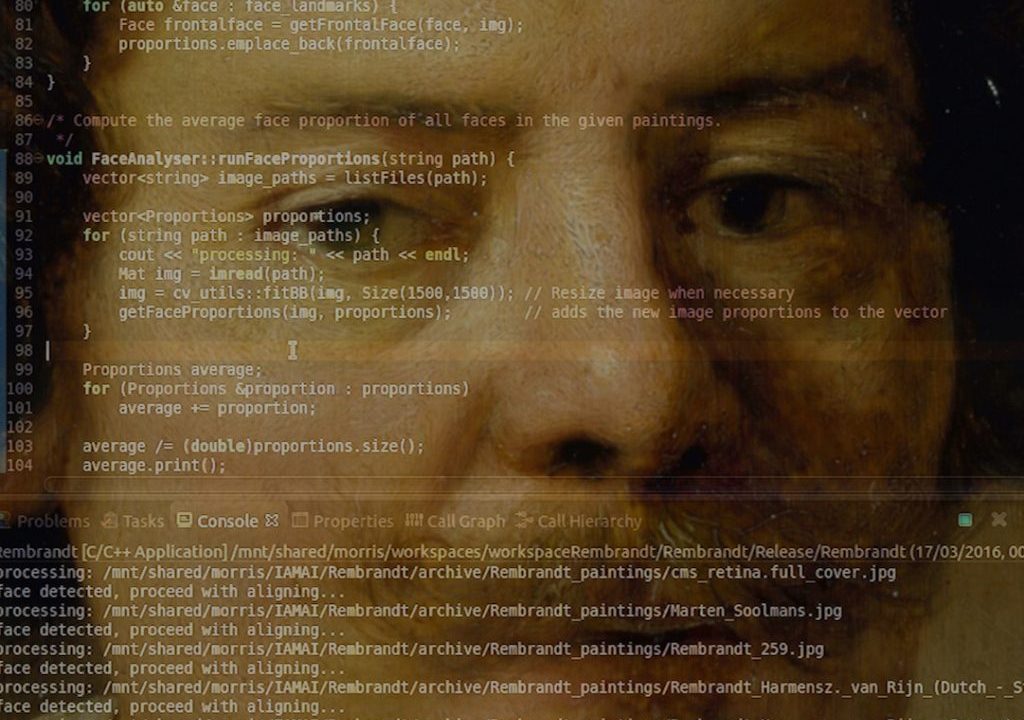

Just as IBM’s Watson can perform some aspects of medical diagnosis or legal research faster and more effectively than humans, it may turn out to excel at some museum-related work as well. Art authentication is an increasingly fraught field, with artist-specific foundations, collectors, and experts tangled over who has the final word over attribution. Some foundations, like the Pollock-Krasner and the Warhol, have ceased doing authentication from fear of lawsuits or other concerns. AI is being recognized as a powerful tool for detecting fakes and forgeries; perhaps it can tackle authentication as well. When it comes down to recognizing what we have previously described as “style”—the ineffable quality that can only be recognized by instinct and training—museums may come to rely on AI as well as the “curatorial eye.”

FURTHER READING

Preparing for the Future of Artificial Intelligence. Executive Office of the President, National Science and Technology Council, October 2016. This report surveys the current state of AI and its existing and potential applications, and identifies the questions it raises for society and public policy.

Artificial Intelligence and Life in 2030. Stanford University, 2016. This report is the first in a series to be issued at regular intervals as a part of the One Hundred Year Study on Artificial Intelligence (AI100). Starting from a charge given by the AI100 Standing Committee to consider the likely influences of AI in a typical North American city by the year 2030, the 2015 Study Panel focuses on transportation; service robots; health care; education; low-resource communities; public safety and security; employment and the workplace; and entertainment.
