Each year, AAM’s TrendsWatch serves as the launch pad for a longer, deeper exploration of the forces shaping our world. In the months following the release of the forecasting report, I look forward to emails from museums around the country offering to share the good work they are doing around these themes. Today on the blog, Michelle Padilla and Jane Thaler tell us how they and their colleagues at the Carter are tackling the need for guidelines steering the museum’s use of artificial intelligence.

If this post piques your interest, join me on October 29 & 30 at the Future of Museums Summit to hear from other colleagues on the challenges and opportunities around the use of AI in museums, one of four tracks in this virtual conference. Early Bird Registration is now open.

–Elizabeth Merritt, VP Strategic Foresight and Founding Director, Center for the Future of Museums

The robots are coming for us! Actually, they are already here and have mostly slipped into our daily lives without much fuss . . . until late 2022, when the artificial intelligence (AI) company OpenAI launched ChatGPT and, suddenly, AI seemed to be everywhere.

In the beginning

In early 2023, a colleague at the Amon Carter Museum of American Art (the Carter) returned from a conference and shared that there was a lot of talk about how to keep proprietary information safe from AI crawlers. This initial concern led us to take a deep dive into AI to gain a better understanding of what it is and isn’t, how staff at the Carter might use it, how they shouldn’t use it, and how to provide them with some basic AI literacy.

We started by immersing ourselves in articles and webinars to get a better feel for the broad scope of AI (the good, the bad, and the ugly) and how it is already being applied in the museum field. What became clear early on was that AI is just another tool in the toolbox, and we started to think about it within the context of a SWOT analysis (strengths, weaknesses, opportunities, and threats).

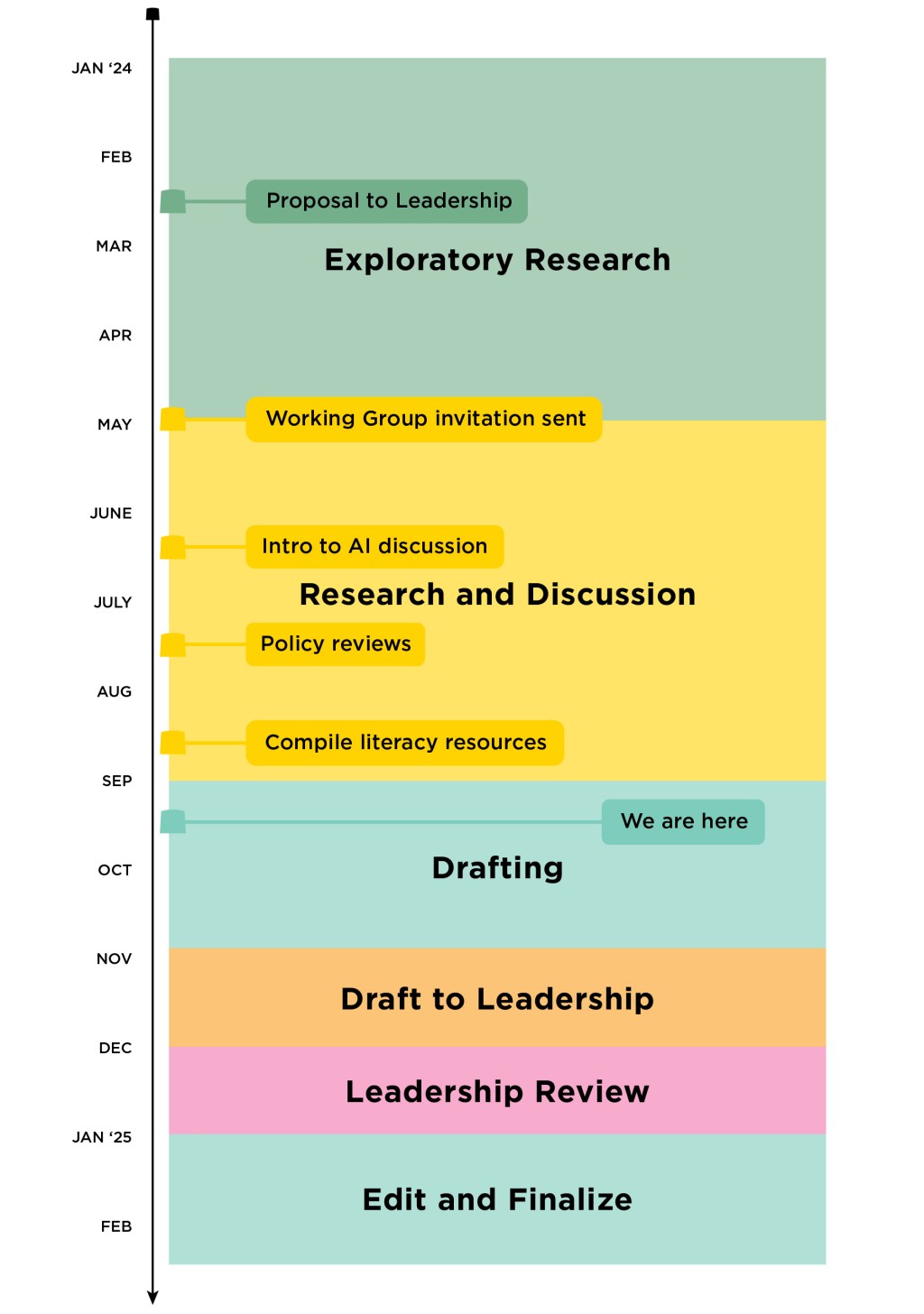

After several months of reading, watching, listening, and thinking, we took a proposal to the Carter’s Leadership Team: create a cross-departmental working group of staff to develop guidelines for the use of AI tools at the Carter. Around the same time, AAM’s TrendsWatch report came out with an article on “AI Adolescence,” which reinforced our proposal.

The Leadership Team had many questions about AI, including how other museums were using it, whether and how staff were currently using it, whether a Carter policy should focus on a particular platform, and who should be part of the working group. The first step was to hold departmental discussions on whether and how staff were already using AI, how they might use it if the opportunity arose, and how they would never use it. The takeaways from those group discussions were threefold:

- Concerns about privacy, copyright, and authenticity were paramount.

- Current AI use was primarily for text or image generation.

- Most staff were not aware of AI applications beyond generative AI.

Working from a list of staff who already had an interest in AI technology and others who might be important stakeholders for their departments, our Leadership Team approved a working group of nine people representing Archives, Collections, Development, Education, IT, Marketing/Communications, and Retail.

The AI working group

During our informal conversations with staff before starting the working group, it became clear that reflecting on personal AI use would be a crucial first phase. Many staff members did not realize how frequently they interact with AI in their daily lives, from using voice assistants like Siri and Alexa to relying on recommendation algorithms on streaming platforms. Another layer to explore was how deeply AI tools had already become interwoven into the platforms we use at work.

As a working group, we wanted to explore these themes in a way that encouraged discussion while respecting colleagues’ varying bandwidths. To do so, we used discussion boards in Basecamp to facilitate asynchronous interaction, much like the discussion forums common in online courses. The boards gave staff space to engage with current trends, share insights, and reflect on their personal views and use of AI.

To guide the discussions, we read three general-interest articles from popular news outlets: “This is how AI image generators see the world” and “AI can now create images out of thin air. See how it works.” from the Washington Post; and the Wall Street Journal’s “Beginner’s Guide to Using AI: Your First 10 Hours.” To focus on AI applications in museums, we read two fantastic resources in addition to AAM’s “AI Adolescence” article: AI: A Museum Planning Toolkit, from the collaborative Museums + AI Network, and “Developing responsible AI practices at the Smithsonian Institution.”

Through these fruitful discussions, we built a collective knowledge base that served as a jumping-off point.

The shift from policy to guidelines

Through our readings and discussions, we soon recognized the need to shift our focus from strict policy rules to more flexible guidelines. We also saw the need for a public statement affirming the Carter’s commitment to transparency and best practices, along with AI literacy resources for staff. This decision was influenced by several factors:

- Institution size and digital footprint: As a midsize museum with a comprehensive and competent digital presence, we realized that imposing rigid policy rules might not be practical given the rapidly changing AI landscape. It was essential that we develop guidelines that are adaptable and responsive to new developments in AI technology, and that we support the creative and ethical use of AI tools.

- Collecting focus: Our museum’s focus on art, archives, and library collections, as opposed to scientific or historical collections, shapes our approach to AI. AI applications for these collections differ significantly from those for scientific or historical ones, and our guidelines need to reflect these unique considerations.

- Information literacy: We identified a gap in AI literacy among our staff. Enhancing AI literacy therefore became a priority, as it empowers staff to make informed decisions and engage more effectively with AI tools.

To develop our guidelines, we continued our group discussions with close readings of existing resources, sourced by our Head of IT, from leading institutions and organizations such as the Museum of Fine Arts, Houston; Shedd Aquarium; the Dallas Museum of Art; and the digital agency ForumOne. These examples provided valuable insights, pointing us toward crafting guidelines that are both relevant and practical for our context.

Coming soon!

The next phase of our work involves drafting and finalizing our guidelines and public statement on AI use at the Carter. This will include clear, actionable recommendations that reflect our commitment to ethical AI use, transparency, and continual learning. We will also develop comprehensive literacy resources to support staff in understanding and engaging with AI technology.

Our goal is to create a dynamic framework that can evolve alongside advancements in AI, ensuring that the Carter remains at the forefront of innovation while upholding our core values and mission.